Knowledge Base


You can quickly create an enterprise or personal knowledge base using a code repository. By uploading documents to the repository and configuring the relevant pipeline, the document content can be automatically processed by a large model and uploaded to the knowledge base. This enables use cases such as page Q&A and Open API, and can be used to quickly build RAG (Retrieval-Augmented Generation) applications.

Background Knowledge

Understanding the RAG Application Workflow

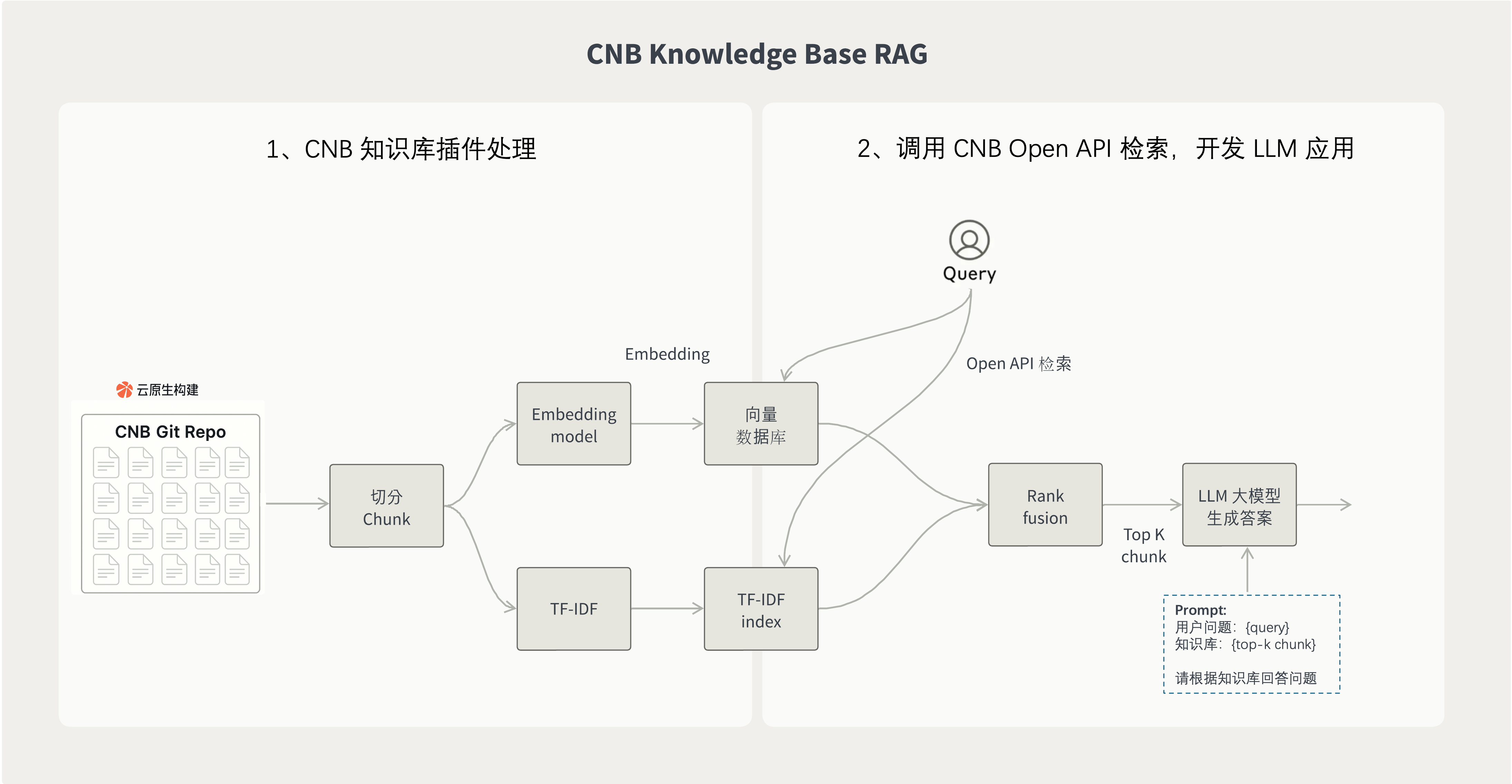

The diagram above illustrates the two-step process of building a RAG application using CNB's knowledge base plugin.

1. Import Repository Documents into the Knowledge Base Using the Plugin

Use CNB's knowledge base plugin to import repository documents into CNB's knowledge base. The plugin runs in a cloud-native build environment and automatically handles tasks such as document chunking, tokenization, and vectorization. Once the knowledge base is built, it can be used by downstream LLM applications.

2. Develop LLM Applications Using CNB Open API for Retrieval

After the knowledge base is built, use CNB's Open API to retrieve relevant content and combine it with an LLM to generate responses.
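To make the retrieval idea concrete, here is a minimal sketch of how vector retrieval works in principle: each chunk and the query are represented as vectors, and the chunks most similar to the query are returned. This illustrates the general technique only and is not CNB's implementation. The function names `cosineSimilarity` and `retrieveTopK`, the parameter names, and the toy three-dimensional vectors are all hypothetical.

```javascript
// Cosine similarity between two equal-length numeric vectors.
function cosineSimilarity(a, b) {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  // A zero vector has no direction, so treat its similarity as 0.
  if (normA === 0 || normB === 0) return 0;
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Hypothetical in-memory retrieval: score every chunk, drop low scores,
// and return the best `topK` matches in descending score order.
function retrieveTopK(queryVector, index, topK = 5, scoreThreshold = 0) {
  return index
    .map(({ chunk, vector }) => ({ chunk, score: cosineSimilarity(queryVector, vector) }))
    .filter((result) => result.score >= scoreThreshold)
    .sort((x, y) => y.score - x.score)
    .slice(0, topK);
}

// Toy index with made-up vectors (real embeddings have many more dimensions).
const toyIndex = [
  { chunk: 'How to configure a pipeline', vector: [1, 0, 0] },
  { chunk: 'How to develop a plugin', vector: [0, 1, 0] },
  { chunk: 'Plugin pipeline settings', vector: [1, 1, 0] },
];
const hits = retrieveTopK([1, 0, 0], toyIndex, 2);
console.log(hits.map((hit) => hit.chunk));
```

In a real deployment the embedding and similarity search happen on the server side; a client only sends a text query and receives scored chunks.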

A typical RAG application workflow is as follows:

1. The user asks a question.
2. Interpret the user's question and turn it into a query against the knowledge base. As mentioned above, use CNB's Open API to retrieve relevant document snippets.
3. After retrieving results from the CNB knowledge base, construct a prompt by combining the question with the knowledge context. For example, the combined prompt might look like this:

    User Question: {User Question}
    Knowledge Base: {Retrieved Content from Knowledge Base}
    Please answer the user's question based on the knowledge base.

4. Send the combined prompt to the LLM to generate a response and return it to the user.
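The prompt-construction step described above can be sketched in JavaScript as follows. The helper name `buildPrompt` and the sample snippets are hypothetical; the template simply mirrors the example prompt, and you may want different wording for your own application.

```javascript
// Hypothetical helper: combine the user's question with retrieved snippets
// into a single prompt that follows the example template.
function buildPrompt(question, snippets) {
  // Separate snippets with a blank line so the model can tell them apart.
  const knowledge = snippets.join('\n\n');
  return [
    `User Question: ${question}`,
    `Knowledge Base: ${knowledge}`,
    "Please answer the user's question based on the knowledge base.",
  ].join('\n');
}

// Usage example with made-up snippets
const examplePrompt = buildPrompt('How do I build a knowledge base?', [
  'Configure the pipeline in .cnb.yml.',
  'Use the cnbcool/knowledge-base plugin.',
]);
console.log(examplePrompt);
```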

Usage Instructions

Step 1: Configure the Pipeline to Use the Knowledge Base Plugin

Plugin Image Name: cnbcool/knowledge-base

Configure the pipeline in the repository's .cnb.yml file to use the knowledge base plugin. As shown in the configuration below, when code is pushed to the main branch, the pipeline will be triggered. It will automatically use the knowledge base plugin to process Markdown files through chunking, tokenization, vectorization, etc., and upload the processed content to CNB's knowledge base.

    main:
      push:
        - stages:
            - name: build knowledge base
              image: cnbcool/knowledge-base
              settings:
                include:
                  - docs/*.md
                  - docs/*.txt

Some plugin parameters are explained below. For more details, refer to the cnbcool/knowledge-base plugin documentation.

- include: Specifies the files to include, using glob patterns. Default is *, which includes all files. Supports arrays or comma-separated values.
- exclude: Specifies the files to exclude, using glob patterns. Default is to exclude nothing. Supports arrays or comma-separated values.
- chunk_size: Specifies the text chunk size. Default is 1500.
- chunk_overlap: Specifies the number of overlapping tokens between adjacent chunks. Default is 0.
- embedding_model: Specifies the embedding model. Default is hunyuan. Currently, only hunyuan is supported.
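To illustrate how chunk_size and chunk_overlap interact, here is a minimal sliding-window sketch. It is not the plugin's actual splitting logic, which is not described here. The function name `chunkTokens` is hypothetical, the sketch assumes both values are counted in tokens, and it uses whitespace-separated words as stand-in tokens.

```javascript
// Hypothetical illustration: split a token array into chunks of `chunkSize`
// tokens, where adjacent chunks share `chunkOverlap` tokens.
function chunkTokens(tokens, chunkSize = 1500, chunkOverlap = 0) {
  if (chunkSize <= 0) {
    throw new RangeError('chunkSize must be positive');
  }
  if (chunkOverlap < 0 || chunkOverlap >= chunkSize) {
    throw new RangeError('chunkOverlap must be in the range [0, chunkSize)');
  }
  // Each new chunk starts `step` tokens after the previous one.
  const step = chunkSize - chunkOverlap;
  const chunks = [];
  for (let start = 0; start < tokens.length; start += step) {
    chunks.push(tokens.slice(start, start + chunkSize));
    // Stop once a chunk has reached the end of the input.
    if (start + chunkSize >= tokens.length) break;
  }
  return chunks;
}

// Usage example: words stand in for tokens
const words = 'a b c d e f g h i j'.split(' ');
console.log(chunkTokens(words, 4, 1));
// → [['a','b','c','d'], ['d','e','f','g'], ['g','h','i','j']]
```

A non-zero overlap repeats the tail of one chunk at the head of the next, which can help when a sentence that answers a question straddles a chunk boundary, at the cost of storing more chunks.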

Alternatively, you can run the plugin with the following command in a cloud-native development environment. Note that the Docker-in-Docker (DinD) service must be enabled; it is enabled by default in the default development environment. For custom development environments, refer to service-docker.

    docker run --rm \
        -v "$(pwd):$(pwd)" \
        -w "$(pwd)" \
        -e CNB_TOKEN=$CNB_TOKEN \
        -e CNB_REPO_SLUG_LOWERCASE=$CNB_REPO_SLUG_LOWERCASE \
        -e PLUGIN_INCLUDE="docs/*.md,docs/*.txt,docs/*.pdf" \
        cnbcool/knowledge-base

Step 2: Using the Knowledge Base

Once the knowledge base is built, you can query and retrieve content from the repository's knowledge base using the Open API. The retrieved content can be combined with an LLM to generate responses.

Before starting, read: CNB Open API Usage Guide. The access token requires the repo-code:r (repository read) permission.

Note: Replace {slug} with the repository slug. For example, the repository URL for CNB's official documentation knowledge base is https://cnb.cool/cnb/feedback, so {slug} would be cnb/feedback.

API Information

- URL: https://api.cnb.cool/{slug}/-/knowledge/base/query
- Method: POST
- Content Type: application/json

Request Parameters

The request body should be in JSON format and include the following fields:

- query: String, required. The keyword or question to query.
- top_k: Number, default 5. The maximum number of results to return.
- score_threshold: Number, default 0. The relevance score threshold for matches.

Example:

    {
      "query": "Workspace configuration for custom buttons"
    }

Response Content

The response is in JSON format and includes an array of results. Each result contains the following fields:

- score: Number, the relevance score, ranging from 0 to 1. Higher values indicate better matches.
- chunk: String, the matched knowledge base content text.
- metadata: Object, the content metadata.

Details of the metadata field:

- hash: String, the unique hash of the content.
- name: String, the document name.
- path: String, the document path.
- position: Number, the position of the content in the original document.
- score: Number, the relevance score. Higher values indicate better matches.
- type: String, the content type, such as code or issue.
- url: String, the content URL.
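Given results with the fields described above, a client might filter and de-duplicate them before building a prompt. The sketch below is one possible approach, not part of the CNB API: the helper name `selectSnippets`, the 0.5 cutoff, and the sample data are hypothetical, and it assumes that two results with the same metadata.hash carry the same content.

```javascript
// Hypothetical helper: keep results at or above `minScore`, drop duplicates
// that share the same metadata.hash, and attach the source to each snippet.
function selectSnippets(results, minScore = 0.5) {
  const seenHashes = new Set();
  const snippets = [];
  for (const { score, chunk, metadata } of results) {
    if (score < minScore || seenHashes.has(metadata.hash)) continue;
    seenHashes.add(metadata.hash);
    snippets.push(`${chunk}\n(Source: ${metadata.path}, ${metadata.url})`);
  }
  return snippets;
}

// Usage example with made-up results
const sampleResults = [
  { score: 0.9, chunk: 'First chunk', metadata: { hash: 'h1', path: 'docs/a.md', url: 'https://example.com/a' } },
  { score: 0.8, chunk: 'First chunk', metadata: { hash: 'h1', path: 'docs/a.md', url: 'https://example.com/a' } },
  { score: 0.2, chunk: 'Weak match', metadata: { hash: 'h2', path: 'docs/b.md', url: 'https://example.com/b' } },
];
console.log(selectSnippets(sampleResults));
```

Keeping the path and URL next to each snippet lets the final answer cite where its information came from.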

Response Example:

    [
      {
        "score": 0.8671732,
        "chunk": "This cloud-native remote development solution is based on Docker...",
        "metadata": {
          "hash": "15f7a1fc4420cbe9d81a946c9fc88814",
          "name": "vscode",
          "path": "docs/vscode.md",
          "position": 0,
          "score": 0.8671732,
          "type": "code",
          "url": "https://cnb.cool/cnb/docs/-/blob/3f58dbaa70ff5e5be56ca219150abe8de9f64158/docs/vscode.md"
        }
      }
    ]

cURL Request Example:

    curl -X "POST" "https://api.cnb.cool/cnb/feedback/-/knowledge/base/query" \
        -H "accept: application/json" \
        -H "Content-Type: application/json" \
        -H "Authorization: Bearer ${token}" \
        -d '{
          "query": "Workspace configuration for custom buttons"
        }'

The retrieved content can be combined with an LLM to generate responses.
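The same request can also be assembled in JavaScript, including the optional top_k and score_threshold fields. This is a minimal sketch: the helper name `buildQueryRequest` and its option names `topK` and `scoreThreshold` are hypothetical, and the token shown is a placeholder. The helper only builds the request; sending it is left to `fetch`.

```javascript
// Hypothetical helper: build the URL and fetch() options for a knowledge base query.
function buildQueryRequest(slug, token, query, { topK = 5, scoreThreshold = 0 } = {}) {
  if (typeof query !== 'string' || query.trim() === '') {
    throw new TypeError('query is required and must be a non-empty string');
  }
  return {
    url: `https://api.cnb.cool/${slug}/-/knowledge/base/query`,
    init: {
      method: 'POST',
      headers: {
        accept: 'application/json',
        'Content-Type': 'application/json',
        Authorization: `Bearer ${token}`,
      },
      // Field names follow the request parameters documented above.
      body: JSON.stringify({ query, top_k: topK, score_threshold: scoreThreshold }),
    },
  };
}

// Usage example (the token is a placeholder)
const request = buildQueryRequest(
  'cnb/feedback',
  'your-cnb-token',
  'Workspace configuration for custom buttons',
  { topK: 3, scoreThreshold: 0.5 },
);
console.log(request.url);
// To send it from an async function:
//   const results = await (await fetch(request.url, request.init)).json();
```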

RAG Mini Application Example

For example, here is a simple RAG application implemented in JavaScript:

    import OpenAI from 'openai';

    // Configuration
    const CNB_TOKEN = 'your-cnb-token'; // Replace with your CNB access token, requires `repo-code:r` permission
    const OPENAI_API_KEY = 'your-openai-api-key'; // Replace with your OpenAI API key
    const OPENAI_BASE_URL = 'https://api.openai.com/v1'; // Or your proxy URL
    const REPO_SLUG = 'cnb/feedback'; // Replace with your repository slug

    // Initialize OpenAI client
    const openai = new OpenAI({
      apiKey: OPENAI_API_KEY,
      baseURL: OPENAI_BASE_URL
    });

    async function simpleRAG(question) {
      // 1. Query CNB knowledge base
      const response = await fetch(`https://api.cnb.cool/${REPO_SLUG}/-/knowledge/base/query`, {
        method: 'POST',
        headers: {
          'Content-Type': 'application/json',
          'Authorization': `Bearer ${CNB_TOKEN}`
        },
        body: JSON.stringify({ query: question })
      });

      // Fail early on HTTP errors (for example, an invalid token) instead of parsing an error body
      if (!response.ok) {
        throw new Error(`Knowledge base query failed: ${response.status} ${response.statusText}`);
      }

      const knowledgeResults = await response.json();

      // 2. Extract knowledge content (here, all results are used)
      const knowledge = knowledgeResults
        .map(item => item.chunk)
        .join('\n\n');

      // 3. Call OpenAI to generate a response
      const completion = await openai.chat.completions.create({
        model: "gpt-4.1-2025-04-14",
        messages: [
          {
            role: "user",
            content: `Question: ${question}\n\nKnowledge Base: ${knowledge}\n\nPlease answer the question based on the knowledge base.`,
          },
        ],
      });

      return completion.choices[0].message.content;
    }

    // Usage example (top-level await requires running this file as an ES module)
    const answer = await simpleRAG("How to develop a plugin?");

    // Output the response combined with the knowledge base
    console.log(answer);

Enabling the Knowledge Base in AI Conversations

Once the knowledge base is built, you can enable it in the AI conversation feature on the repository page, allowing the AI to conduct conversations based on the repository's document content.

You can also customize the knowledge base through the .cnb/settings.yml UI Customization Configuration File, including importing configurations from other repositories, role-playing, customizing knowledge base button styles, and more.

Importing Configurations from Other Repositories

Reference knowledge base content from other repositories in knowledge base conversations to enable cross-repository knowledge retrieval. For example, when a project depends on documents from other repositories, their knowledge bases can be imported as well.

For specific configuration methods, refer to the knowledgeBase.imports.list section in the UI Customization Configuration File.

Defining AI Role Systems

Create different AI roles, each with a specific identity and response style. For example:

- Junior Engineer: Explains concepts in simple terms.

- Mid-Level Engineer: Provides professional technical answers.

- Senior Engineer: Solves complex technical issues, offers architectural design, and provides in-depth insights.

For specific configuration methods, refer to the knowledgeBase.roles section in the UI Customization Configuration File.

Customizing Knowledge Base Button Styles

Customize the name, description, and hover image of the knowledge base entry button to make the entry point more recognizable and visually engaging.

For specific configuration methods, refer to the knowledgeBase.button section in the UI Customization Configuration File.

Setting Default Repository and Default Role Options

Configure the default selected repository and default AI role in the knowledge base pop-up to improve user experience and reduce selection steps for each operation.

For specific configuration methods, refer to the knowledgeBase.defaultRepo and knowledgeBase.defaultRole sections in the UI Customization Configuration File.

Mentioning AI Roles in Comments

In the .cnb.yml file on the default branch of the AI role's repository, you can define the pipeline configuration that is triggered when the AI role is mentioned (@).

In Issue or PR comments in other repositories, you can mention (@) AI roles defined in the knowledge base. This triggers an NPC event, and CNB uses the .cnb.yml file described above as the pipeline configuration for that NPC event.

For more details, please refer to NPC Events.